How to define high degree constraint in Plonky3 and Stwo as a user

Plonky3:

A user must verify the following equation when determining the degree of the constraint (further explanation is provided in the next section):

let \(d\) be the degree of the constraint:

\(d-1\leq 2^{\text{log_blow_up_factor}}\)

If this condition is not met, an error will occur: lde.height() >= domain.size()

Stwo

The user must first define the maximum degree bound. If the constraints exceed this bound, there will be no explicit error indicating that the failure is due to a high-degree constraint. Instead, the error will appear as “constraint not satisfied” because the out-of-domain sample evaluation does not match.

set the maximum degree bound to be self.log_degree()+1 is equivalent to getting maximum 2 degree constraint in plonky3

Why the constraint degree affects the other parameters?

In both Plonky3 and Stwo, quotient polynomial evaluations are computed using an evaluate function. This function processes (Plonky3, Stwo) the data row by row to compute the quotient polynomial evaluation at a specific point. To understand this process, the following parameters must be clearly understood:

the degree of trace polynomial \(t\)

the degree of the original trace polynomial is \(t\), in other words, the size of the trace domain is \(t\), meaning there are \(t\) rows in the original trace

the degree of the constraint \(d\)

In Plonky3, the maximum degree of a constraint is determined by the highest power of the terms in the constraint polynomial relative to the trace polynomial.

for example: if the trace polynomials are \(t_i(x)\), they all have degree \(t\), and the constraint polynomial is \(t_1(x)t_2(x)t_3(x)t_4(x)-t_5(x)\), then this constraint has degree 4, meaning the constraint polynomial has degree \(4\cdot t\)

the degree of the quotient polynomial \((d-1)\cdot t\):

since \(\text{quotient polynomial}=\frac{\text{constraint polynomial}}{\text{zero fier}}\), zero fier has the same degree as the trace polynomial, the quotient polynomial has degree \((d-1)\cdot t\), meaning one needs at least \((d-1)\cdot t\) evaluations to uniquely determine the quotient polynomial. The evaluator function that processes row by row to compute quotient polynomial evaluations needs to evaluate the constraint in total \((d-1)\cdot t\) number of rows.

why in plonky3: \(d-1\leq 2^{\text{log_blow_up_factor}}\)?

When the evaluator evaluates the constraint, it goes through the trace polynomials that form this constraints, therefore each trace polynomial also needs to provides these \((d-1)\cdot t\) number of evaluations (rows). In plonky3, the evaluations of the trace polynomials are computed in the trace evaluation domain, the domain size is determined by the blow up factor, domain size should not be smaller than the number of evaluations that quotients polynomial needs.

trace evaluation domain size > quotient polynomial degree, therefore, the following equation need to holds

\(d-1\leq 2^{\text{log_blow_up_factor}}\)

However, in stwo’s implementation, the evaluations of the quotient polynomial do not come from the trace evaluation domain (the domain relies on the blow up factor), if the quotient polynomial degree exceeds the trace evaluation domain (size \(t \cdot 2^\text{log_blow_up_factor}\)), stwo will extend this domain to a bigger domain with the demand size, while this wouldn’t affect the blow up factor.

The cost of increasing constraint degree in Plonky3 and Stwo

Plonky3

Increasing the degree of the constraint leads to a higher blow-up factor, which expands the size of the trace evaluation domain for every column in the trace.

Stwo

Increasing the degree of the constraint does not affect the blow-up factor. However, each trace polynomial is extended to match the length of the quotient polynomial, allowing the evaluator to compute the quotient polynomial row by row.

Summary:

FFT/IFFT cost

Increasing the constraint degree requires additional FFT computation. The most significant computational cost when adding more columns also comes from FFT/IFFT operations, which involve three transformations:

- Trace domain evaluations → Trace polynomial

- Trace polynomial → Trace evaluation domain evaluations

In Stwo:

if blow up factor is not changed, Increasing the constraint degree requires additional FFT:

Trace polynomial → A larger evaluation domain than the trace domain.

However, this must be performed for every committed trace column.

one can also just increase the blow up factor to match the demand trace evaluation domain size.

In plonky3

If this reasoning holds, increasing the constraint degree by 1 in a proof system with \(n\) committed traces would have a computational cost roughly equivalent to adding \(\frac{n}{3}\) committed columns.

This applies to Stwo, considering only FFT/IFFT computation costs. Other factors, such as expanding the circle domain, are ignored. In Plonky3, increasing the constraint degree also requires adjusting the blow-up factor, making the cost more complex.

This is a quite rough comparison, the increased size, relatively to power of 2 domain size, these factors also need to be considered.

commitment cost

as the blow up factor increased, the evaluation domain is bigger, more data to be committed by Merkle tree, the commitment cost will be more as well.

Related code in Plonky3

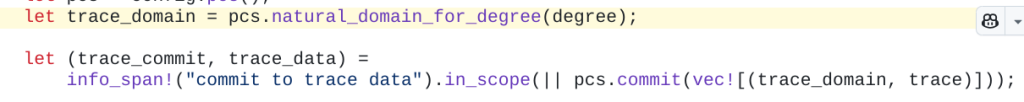

- step1: create trace domain, interpolate the trace

natural domain has the same size of the trace. However, in pcs.commit, the trace data will be in the trace evaluation domain, has blowup factor, as shown below

after commit the trace_data has the trace evaluated in the trace evaluation domain, with size \(\text{trace}\cdot 2^\text{blow_up_fact}\)

step 2: create quotient domain, size is trace size * constraint degree

step3: get trace poly evaluations on the quotient domain, here requires the trace poly evaluation domain has the same size with the quotient domain.

Proof size and blow-up factor

The security level is computed by

\(\text{log_blow_up } \cdot \text{num_queries} + \text{proof_of_work_bit}\)

set trace degree as \(2^t\), for a fixed security level, when increase the blowup factor by \(k\), query number become \(\frac{1}{k} \cdot \text{num_queries}\), the trace evaluation domain is increased from \(2^{t+\text{log_blow_up}}\)to \(2^{t + k\cdot \text{log_blow_up}}\)

the Merkle tree path proof size is linear to the depth of the Merkle tree, assume each query get a proof with size ( \(\text{depth}\cdot h\)) (plonky3 uses this merkle tree, may need more check here)

original proof size:

\((t+\text{log_blow_up}) \cdot h \cdot \text{query_number}\)

increase log_blow_up_factor by k, proof size become

\((t+k\cdot \text{log_blow_up} )\cdot h \cdot \frac{\text{query_number}}{k}\)

proof size will be reduced.

Nice!

It should be \(d – 1 \le 2^{blowup}\) though, right? For example, AFAIK SP1 uses a blow up factor of 2, so they have a degree bound of 3, while OpenVM has a blow up factor of 4, so they have a degree bound of 5.

About the cost in Plonky3: I agree that the Merkle paths are longer, but with an increased blow-up factor, the number of FRI queries can be reduced, see for example [this function](https://github.com/openvm-org/stark-backend/blob/227a0371a02bb183423d0cd6a0b361774b61f977/crates/stark-sdk/src/config/fri_params.rs#L53-L91). So I feel like the proof should even become smaller?

Lastly, I don’t understand why there is an *additional* FFT. Wouldn’t the second one (Trace polynomial → Trace evaluation domain evaluations) just become larger, because the trace evaluation domain doubles in size?

My understanding about comparing the SP1 config (blow up 2, degree bound 3) with the OpenVM config (blow up 4, degree bound 5) would be:

– The cost of the FFT (Trace domain evaluations → Trace polynomial) should be the same

– The IFFT (Trace polynomial → Trace evaluation domain evaluations) should be about twice as expensive, because there are twice as many evaluations

– The commitment cost should also be 2x more expensive, because the data increases by a factor of 2

This would be the case for the same trace, but of course, with a larger degree bound, you can save trace cells (e.g. $x^5$ can be computed directly using a degree bound of 5, but with a smaller degree bound needs extra witness cells to materialize smaller powers).

Thanks for the comment! Finally I have a real commenter, not just spammers ^^

Yes, the proof size should be reduced (I added more notes about the proof size in the last section). It also makes sense that simply increasing the blow-up factor to get a sufficiently large evaluation domain eliminates the need for an “additional FFT.” In Plonky3, the blow-up factor must always be large enough to match the quotient polynomial domain size. However, Stwo provides an option to perform an “additional FFT” to extend the evaluation domain size without increasing the blow-up factor. But now, this approach seems unnecessary maybe? if one already pays the extra cost for FFT, why not also reduce the proof size…

I also agree with the cost comparison between SP1 and OpenVM.

One thought: A higher-degree constraint requires a larger trace evaluation domain, but this applies only to the traces that actually contribute to the high-degree constraint. Instead of increasing the blow-up factor for all trace evaluation domains, why not just extend the necessary traces?

ah, if evaluation domains have different size, they will contain different points, then the quotient polynomial evaluations from different constraint will be evaluated at different points, then they cannot be combined linearly by randomness. got it.